Duplicate content is a hot topic in the SEO world. Many wonder if it’s bad for their website and if they should worry about it.

This article answers common questions about duplicate content: what it is, the different types, how to identify it, and how to prevent it.

What is Duplicate Content?

Duplicate content refers to substantial blocks of content, within or across domains, that are identical or very similar. The same content can appear on multiple pages of one website, or it can be duplicated across different websites.

Content does not have to be a 100% match to count as duplicate: Google can still treat pages as duplicates if most of their content is similar. Most often, though, the issue arises when exactly the same content appears on multiple URLs. Duplication can be intentional or unintentional.
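
As a rough illustration of how two pages can count as duplicates without matching 100%, the sketch below compares two texts word by word using Python’s standard difflib module. The function names and the 0.8 threshold are assumptions chosen for illustration; they do not describe how Google measures similarity.

```python
from difflib import SequenceMatcher


def similarity(text_a: str, text_b: str) -> float:
    """Return a 0.0-1.0 similarity ratio between two texts, compared word by word."""
    # Lowercase and split on whitespace so casing and spacing differences do not matter.
    words_a = text_a.lower().split()
    words_b = text_b.lower().split()
    return SequenceMatcher(None, words_a, words_b).ratio()


def is_near_duplicate(text_a: str, text_b: str, threshold: float = 0.8) -> bool:
    """Flag two texts as near-duplicates. The 0.8 threshold is an arbitrary assumption."""
    return similarity(text_a, text_b) >= threshold
```

Two product descriptions that differ by a single word would be flagged here, while two unrelated descriptions would not.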

How Does Duplicate Content Impact SEO?

Duplicate content can significantly impact your website’s SEO and user experience, whether the similar or identical information sits on several pages of your own site or on different websites.

One major issue is confusion for users. Imagine someone visiting your site and finding identical or nearly identical information on different pages. This can confuse and frustrate readers, making it challenging to determine which page to trust. It hinders their experience and could lead to a loss of credibility for your site.

From an SEO perspective, having duplicated content can cause problems with search engines. Search engines aim to provide users with the most relevant and diverse information. When the same or very similar content appears on multiple pages, it confuses search engine bots. They may struggle to identify the most authoritative or relevant page, leading to potential ranking issues.

URL structure plays a role in this. If you have different URLs for similar content, like when tracking parameters or session IDs are added, search engines might treat them as distinct pages, diluting the SEO value. This fragmentation can result in the search engine not consolidating link equity, which is crucial for determining a page’s authority.

When multiple versions of a page exist, whether due to category pages, variations in URL parameters, or other causes, it can impact link metrics. Link equity, the value assigned to a page through inbound links, may be dispersed among these different versions. As a result, the SEO impact of those valuable links is not maximized.

Duplicate content issues also arise when content is plagiarized across different websites. Search engines may demote sites that deliberately copy content from others, affecting their overall search rankings. It’s crucial to ensure that the content on your website is original and not copied from other sources.

To fix duplicate content on your own site, it’s essential to use proper canonicalization, indicating the preferred version of a page to search engines. This helps consolidate link equity and avoids confusion. Additionally, regular checks using tools like Google Search Console can help identify and address duplicate content issues, ensuring that search engines index the correct and most relevant pages.

Do I get penalized for this?

You can rest easy if you’re worried about duplicate content penalties from Google. Google does not apply a penalty simply for having duplicates on your site. However, duplicates can still cost you, because they affect how your pages rank.

When search engines crawl your site, they try to tell which pages are duplicates and which are originals. The originals carry more weight, while the duplicates are devalued. This means your ranking could suffer if you have a lot of duplicates on your site.

To avoid this, you should have unique and original content on your site to maximize your chances of getting a good ranking.

Types

There are a few different types of duplicate content, but they generally fall into two categories: internal and external.

Internal Duplicate

One type of duplicate content is internal duplicate content, where several pages on your site have the same or similar content. It happens when one domain serves the same content through multiple internal URLs.

Internal duplication can be problematic because it can confuse search engines and make it difficult for them to determine which version of the content is the original. It can result in lower rankings for the site as a whole or for individual pages.

Example

A typical example of internal duplication is an e-commerce website that reuses the same content across products, with similar product descriptions and even the same product feeds, often in an attempt to increase the conversion rate.

URL

example.com/bags/men/backpacks/

example.com/bags/travel-rucksack/men

example.com/promo/mens-backpacks-we-like/

example.com/email-only-mens-backpacks-sale/

External Duplicate

External duplicate content is when two or more different domains have the same page copy indexed by search engines. It often occurs when other sites scrape your content and republish it as their own without attribution or a link back to the source. It can hurt your traffic and search engine ranking, and it can result in legal action if it violates the copyright of the original content.

A common cause is a company that owns multiple domain names, wants all of them to rank for the same keywords, and so uses the same content on each. Another cause is someone scraping your content and putting it on their site.

To combat external duplication, you can use various methods, including Copyscape to find and report infringing sites, implementing a DMCA policy on your site, and using robots.txt to discourage scrapers from accessing your site’s content. Keep in mind that robots.txt is only honored by well-behaved bots, so it will not stop every scraper.

Example

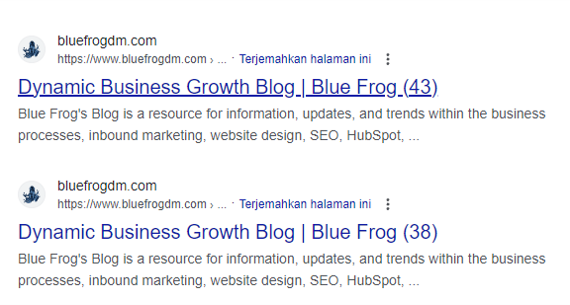

The image above shows an example of external duplicate content.

Common causes of duplicate content

There are a few things you need to be aware of when it comes to duplicate content:

- URL Variations

- Printer-Friendly Version

- Session IDs

- HTTP vs. HTTPS

- WWW vs non-WWW

- Scraped Content

- Trailing Slashes vs Non-Trailing Slashes

URL Variations

URL variations can cause duplicate content because they allow the same content to be indexed multiple times. When that happens, it can dilute the rankings of that content and make it harder for people to find.

Different things can cause URL variations. One is if there are various ways to access identical content on a website.

Another thing that can cause URL variations is if different websites have similar content. It often happens with a blog post or article, where multiple sites will republish the same article.

For example, a page may have one URL in English and a different URL in another language. This can create issues for Google, which may not find your content as easily. Also, if you use a subdomain or folder structure, ensure your URLs are consistent.

Printer-Friendly Version

A common cause of duplicate content is a printer-friendly version of a page, which publishes the same content at a second URL on your website.

When a website has multiple versions of the same content, Google may choose the printer-friendly version as the canonical, or primary, version. That is a problem if you don’t want your users directed to the printer-friendly version.

Knowing how Google chooses the canonical version of your content is essential. You can use the rel="canonical" tag on your pages to tell Google which version of the content you want shown in its search results.

Session IDs

Session IDs are one of the causes of duplicate content, as they can create different URLs for the same content. If you have session IDs on your site, use canonical tags to point search engines to the correct URL.

HTTP vs. HTTPS

HTTP and HTTP Secure (HTTPS) are two different protocols for accessing web content. HTTP is the original, unencrypted protocol, while HTTPS is the secure version that encrypts data before it is transmitted.

HTTP and HTTPS pages are often identical, but they are treated as separate pages by search engines. As a result, if both versions of a page are accessible to search engine crawlers, it can result in duplicate content issues.

It is essential to ensure that only one version of a page is accessible to search engine crawlers. You can signal the preferred version with a redirect, in the HTTP headers, or with a rel="canonical" tag on the page.
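
A minimal sketch of picking the HTTPS version as the preferred one, assuming the site serves every page over HTTPS. The function name to_https is hypothetical; the returned URL is what a redirect or canonical tag could point to.

```python
from urllib.parse import urlsplit, urlunsplit


def to_https(url: str) -> str:
    """Return the HTTPS form of a URL, leaving host, path, and query unchanged."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Only the scheme changes; everything else is kept as-is.
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)
```

A URL that already uses HTTPS passes through unchanged, so the function is safe to apply to every link on a site.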

WWW vs non-WWW

In a URL, “www” is a hostname prefix, as in www.example.com. The non-WWW version is the same domain without that prefix, as in example.com. Technically these are two different hostnames, even when they serve the same site.

If you have both versions live, search engines may see this as duplicate content, which can hurt your rankings. Pick one version as the preferred one and redirect all traffic from the other version to it.

There are a few ways to get WWW and non-WWW handling wrong. One is using the WWW inconsistently in your URLs. Another is not setting up your server to redirect from WWW to non-WWW, or vice versa. Finally, you can make mistakes when manually coding links and accidentally leave out the WWW.
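
One way to keep hand-coded links consistent is to pass them through a small helper before publishing. The sketch below is only an illustration: preferred_host_url is a hypothetical name, and it assumes the site lives on a single hostname with no other subdomains, ports, or credentials in the URL.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_host_url(url: str, use_www: bool = True) -> str:
    """Rewrite a URL so its host consistently has (or lacks) the www prefix."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Strip an existing www. prefix so it is never added twice.
    bare = host[4:] if host.startswith("www.") else host
    new_host = f"www.{bare}" if use_www else bare
    return urlunsplit(parts._replace(netloc=new_host))
```

Whether you standardize on WWW or non-WWW matters less than applying the same choice everywhere.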

Scraped Content

Scraped content is one type of duplicate content that can be an issue. When you publish something online, it’s not just people who might see and read it; web scrapers might, too.

Scrapers are web robots or software that extract content from websites and republish it as their own. Scraped content is a form of plagiarism and is usually of lower quality. Scraping can also be used to harvest sensitive information that is exposed on a page.

If you find that your content has been scraped, take action to have it removed and prevent it from happening again. You can deter scrapers with robots.txt rules or CAPTCHAs, although determined scrapers may ignore robots.txt. You can also report the copy to the site that republished it or file a DMCA request for removal.

Trailing Slashes vs Non-Trailing Slashes

When it comes to duplicate content, one thing you need to be aware of is the difference between URLs with and without a trailing slash. Historically, a trailing slash marked a directory, with more content available beneath that URL, while a URL without one marked a single file. For example, /cats/ and /cats look almost the same, but they are technically two different URLs.

While this may seem a slight distinction, it can make a big difference in search engine optimization. If both versions return the same page, search engines can see them as duplicate content. As a result, your pages may be competing with each other for ranking in search engines.

To avoid this problem, you must be consistent in using trailing slashes. If you use them, make sure to use them on all your pages. Otherwise, stick with the non-trailing slash version. It will help ensure that your pages are properly indexed and ranked by search engines.
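
The consistency rule above can be sketched as a helper that rewrites every internal link to one style. This is an illustrative example, not a standard API: normalize_trailing_slash is a hypothetical name, and leaving file-like paths such as /guide.html untouched is an assumption about how the site is structured.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_trailing_slash(url: str, trailing: bool = True) -> str:
    """Rewrite a URL path to consistently end with, or without, a trailing slash."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if path == "/" or "." in last_segment:
        # Leave the root path and file-like paths (e.g. /guide.html) alone.
        new_path = path
    elif trailing:
        new_path = path if path.endswith("/") else path + "/"
    else:
        new_path = path.rstrip("/")
    return urlunsplit(parts._replace(path=new_path))
```

The same helper works in either direction, so the choice of style is a single argument rather than something each author has to remember.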

How to Identify This?

Duplicate content is a problem that can plague any website, large or small. You can identify it in several ways, including:

- Google Search Console

- Use Crawler Tools

- Manual Searching

Google Search Console

Learning about Google Search Console is essential because it lets you check that the preferred domain for your site is set up correctly. Its reports can detail instances of duplicate title tags and meta descriptions, so you can make changes to your site that help Google index your content correctly.

You can also deal with duplicate content through parameter handling, which lets you specify how Google should treat URLs that differ only in their parameters. One way to do this is the noindex tag. With parameter handling, you tell Google which version of a page to index and which one to ignore.

Use Crawler Tools

Crawler tools are software that “crawl” websites and analyze their content.

When using crawler tools, you can identify duplicate content in three ways: by looking at duplicate titles, descriptions, and main body text. By identifying duplicates, you can take steps to improve your website’s content and make it more unique.
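
To show the idea behind these three checks, here is a minimal sketch that groups already-crawled pages by an exact match on one field. The data layout (a dictionary mapping each URL to its title, description, and body) and the function names are assumptions; real crawler tools work differently and also detect near-duplicates.

```python
import hashlib
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash a text after normalizing case and whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, dict[str, str]], field: str) -> list[list[str]]:
    """Group URLs whose given field ("title", "description", or "body") is identical."""
    groups = defaultdict(list)
    for url, data in pages.items():
        value = data.get(field, "")
        if not value.strip():
            continue  # Skip pages where the field is missing or empty.
        groups[fingerprint(value)].append(url)
    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Calling find_duplicates once per field gives three separate lists, one each for duplicate titles, descriptions, and body text.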

Manual Searching

One way to identify duplicate content is by manual searching. You can do a general search on Google, Yahoo, or Bing. Look for pages that have the same title or meta description. If you find any, check to see if the content is identical. If it is, then you have found some duplicate content.

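You can also use a search operator such as site:domain.com "a sentence from your page" to find pages on your own site that repeat the same text. To identify who duplicates your content on other sites, search for the quoted sentence together with -site:domain.com, which excludes your own domain from the results.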

How to Prevent Duplicate Content?

As duplicate content can be bad for SEO, there are a few things you can do to prevent it:

- 301 Redirects

- Canonical Tags

- Parameter Handling

- Meta Tagging

- Taxonomy

“301” Redirects

Setting up 301 redirects is one way to prevent duplicate content. A 301 redirect permanently sends visitors and search engines from a duplicate URL to the main version of the page. By doing this, you’re telling Google that you want all traffic and link equity to go to one specific URL. Your content stays focused on a single page, and you avoid the ranking problems that duplicate content causes.
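
The sketch below illustrates the response a server could send for a duplicate URL. The REDIRECTS table and the resolve function are hypothetical, and treating the promo pages as duplicates of the main backpack page is an assumption made for the example; in practice redirects are usually configured in the web server or CMS.

```python
from http import HTTPStatus

# Hypothetical mapping of duplicate paths to the main version of each page.
REDIRECTS = {
    "/promo/mens-backpacks-we-like/": "/bags/men/backpacks/",
    "/email-only-mens-backpacks-sale/": "/bags/men/backpacks/",
}


def resolve(path: str) -> tuple[int, dict[str, str]]:
    """Return the status code and headers a server could send for a path."""
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 Moved Permanently, with a Location header pointing at the main page.
        return HTTPStatus.MOVED_PERMANENTLY.value, {"Location": target}
    return HTTPStatus.OK.value, {}
```

A request for a duplicate path gets status 301 and the main URL in the Location header, while the main page itself is served normally.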

Canonical Tags

Canonical tags are a great way to prevent duplicate content. The rel="canonical" link element is an HTML element that tells Google which URL is the preferred version of a page.

By adding this element to your pages, you tell Google to use the canonical URL when indexing them. This helps ensure the right page gets the credit in the search results and prevents duplicate content issues.
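
To check which canonical URL a page actually declares, you can parse its HTML. The following is a minimal sketch using Python’s standard html.parser module; the class and function names are hypothetical, and it assumes you already have the page’s HTML as a string.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # rel can hold several space-separated values, so split before checking.
        rels = (attr_map.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr_map.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

Running this over each duplicate page is a quick way to confirm that all of them point to the same preferred URL.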

Parameter Handling

Parameter handling is the process of ensuring that each unique URL points to a single piece of content. It is achieved by setting up parameters in your CMS or blog software and then configuring your server to handle those parameters appropriately. It helps to keep the search engine’s databases clean and free of duplicate content, which can ultimately help improve search results.
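
As an illustration of mapping several parameterized URLs to one, the sketch below removes parameters that do not change the page and sorts the rest. The names in IGNORED_PARAMS are assumptions; which parameters are safe to drop depends on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content; adjust per site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sid"}


def strip_parameters(url: str) -> str:
    """Drop tracking and session parameters and put the rest in a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    # Sort the remaining parameters so ?a=1&b=2 and ?b=2&a=1 map to one URL.
    kept.sort()
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

The cleaned URL is a reasonable candidate for the canonical URL of every variant it was derived from.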

Meta Tagging

Meta robots tags are essential for tackling duplicate content on your website. They act as clear signals to search engines on how specific pages should be handled, mitigating the impact of duplicated content. Key uses include:

- Preventing Indexing: Using meta robots with noindex tells search engines that certain pages, such as those with URL variations or tracking parameters, should not be indexed, keeping the focus on the preferred version.

- Controlling Follow Links: Employing meta robots with nofollow manages how search engines follow links, preventing link equity distribution among duplicate content and concentrating link flow to preferred pages.

- Handling Plagiarized Content: Using meta robots with noindex helps prevent indexing of plagiarized content, which is crucial for avoiding penalties and maintaining digital marketing integrity.

- Dealing with Session IDs and Tracking Parameters: Meta robots with noindex keeps page variations created by session IDs or tracking parameters out of the index, so only the preferred version is indexed and confusion is avoided.

- Avoiding Duplicate Content Problems: Proper use of meta robots keeps duplicate pages from dragging down rankings, ensuring a positive impact on SEO efforts.

Practically, incorporating these meta tags involves adding a concise HTML snippet to your page’s head section, effectively communicating preferences to search engine bots regarding indexing and link-following behavior.
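
A small sketch of generating that snippet is shown below. The helper name meta_robots is hypothetical; the tag it produces uses the standard robots meta tag with its index/noindex and follow/nofollow values.

```python
def meta_robots(index: bool = True, follow: bool = True) -> str:
    """Build a meta robots tag for the <head> section of a page."""
    directives = [
        "index" if index else "noindex",
        "follow" if follow else "nofollow",
    ]
    return f'<meta name="robots" content="{", ".join(directives)}">'
```

For a duplicate page that should stay out of the index while its links are still followed, meta_robots(index=False) produces the tag to place in the head section.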

Taxonomy

In a content management system (CMS), taxonomy acts like a sorting system that uses categories and tags to organize content efficiently. It supports navigation and content management. For combating duplicate content:

- Categories and Tags: Help avoid content duplication within the same site by categorizing information effectively.

- Search Functionality: Enhances user experience by utilizing taxonomy for relevant and distinct search results, minimizing duplicate content issues.

- URL Structure: Influences clear and unique page addresses, aiding in preventing duplicate URLs and supporting SEO efforts.

By implementing taxonomy, CMSs ensure organized content, making it easily accessible without causing confusion for search engines or users.

Conclusion

In conclusion, the impact of duplicate content on SEO and user experience is significant. It can lead to confusion for users and present challenges for search engines in determining the most relevant and authoritative content. Issues related to URL structure, link metrics, lost traffic, and the consequences of plagiarized content underscore the importance of addressing duplicate content.

Implementing strategies such as 301 redirects, canonical tags, parameter handling, meta-tagging, and taxonomy within Content Management Systems can help prevent and mitigate these challenges, ensuring a more organized, user-friendly, and SEO-friendly online presence.

FAQ

Will fixing duplicate content issues increase my rankings?

Fixing duplicate content can positively impact rankings by improving the clarity of your site’s content for search engines, but it’s just one factor among many.

How much duplicate content is acceptable?

Aim for unique, valuable content. While some duplication may be unavoidable, minimize it to maintain a strong online presence and SEO performance.

What is near-duplicate content?

Near-duplicate content is content that is very similar but not identical. Search engines may recognize this and assess its relevance and impact on rankings.

Is duplicate content illegal?

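Duplicate content on your own site isn’t illegal, but it can affect SEO. Copying content from other sites without permission, however, can violate copyright. Search engines aim to provide diverse results, so unique and high-quality content is recommended for better online visibility.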